RAID Weirdness

Recently I decided to change my whole network infrastructure. One part of this project was to replace my existing Windows machine, a Core i7 950 (LGA 1366), with a new Core i7 3930K (LGA 2011). Since so much was changing, I decided to sell the existing Windows machine and build a new one.

I did, however, decide to keep the Adaptec 3805 PCIe x4 RAID card that used to run in the existing Windows machine (3 x 1.5TB Seagate Barracuda 7200 rpm SATA II HDDs in RAID5, and 2 x 1TB Seagate Barracuda ES.2 7200 rpm SATA I hard drives in RAID1). I needed this space, and since hard drives are so expensive with the floods in Thailand, it just made sense to keep it.
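For reference, the usable space on these arrays follows from the usual RAID arithmetic: RAID5 gives up one drive's worth of capacity to parity, and a two-drive RAID1 mirror gives the capacity of a single drive. A minimal sketch, assuming equal-size drives and decimal terabytes (the function name `raid_usable_tb` is made up for illustration):

```python
def raid_usable_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity in TB for a few common RAID levels (equal-size drives)."""
    if level == "RAID0":
        # Striping: all capacity is usable, no redundancy.
        return drives * drive_tb
    if level == "RAID1":
        # Mirroring: every drive holds the same data.
        return drive_tb
    if level == "RAID5":
        if drives < 3:
            raise ValueError("RAID5 needs at least 3 drives")
        # One drive's worth of space goes to parity.
        return (drives - 1) * drive_tb
    raise ValueError(f"unsupported RAID level: {level}")


print(raid_usable_tb("RAID5", 3, 1.5))  # the 3 x 1.5TB array
print(raid_usable_tb("RAID1", 2, 1.0))  # the 2 x 1TB mirror
```

So the 3 x 1.5TB RAID5 works out to 3TB usable, and the 2 x 1TB RAID1 to 1TB.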

So on the new system I have this configuration:

- Intel Core i7 3930K 6-Core CPU

- Asus P9X79 Pro LGA 2011 Motherboard

- 32GB DDR3-1600 RAM

- nVidia GTX 580 1.5GB GPU

- Dell U2711 27" LCD

- Corsair Cooling Hydro Series H100

- Seasonic Platinum 1000W ATX PSU

- 2 x OCZ Vertex 3 240GB SSDs in RAID0 on the built-in Intel X79 RAID controller as main OS/Apps volume

The Adaptec RAID controller had been with me for the past 3 years and had worked without a fault. However, when I moved it to the new system, I ran into issues. Initially it booted up the two RAID volumes just fine, but performance was degraded. I noticed that copying a large number of photos over the network to the server took longer and longer to complete, and the copy rate dropped off to below 10MB/s. Later, when I copied data from the 1TB RAID1 volume on the Adaptec to the RAID5 volume on the same controller, the copy slowed down to 4MB/s. Something was clearly messed up.
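To put those rates in perspective, here is a rough back-of-the-envelope sketch of how long a copy takes at a sustained rate. The 1000GB size (a completely full 1TB volume) and the 100MB/s comparison rate are assumptions chosen for illustration, not measurements from this system:

```python
def copy_hours(size_gb: float, rate_mb_s: float) -> float:
    """Hours needed to copy size_gb (decimal GB) at a sustained rate in MB/s."""
    seconds = size_gb * 1000 / rate_mb_s  # 1 GB = 1000 MB (decimal units)
    return seconds / 3600


# 4 and 10 MB/s are the rates observed above; 100 MB/s is a hypothetical
# "healthy" rate included only for comparison.
for rate in (4, 10, 100):
    print(f"{rate:>3} MB/s -> {copy_hours(1000, rate):.1f} h")
```

At 4MB/s, moving a full 1TB would take close to three days, which is why the slowdown was impossible to ignore.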

Two days later I got woken up by the wonderful sound of my RAID alarm. The RAID1 had failed, specifically CN1 device 0. I had the RAID5 on CN0 devices 0, 1 and 2, and the RAID1 on CN0 device 3 and CN1 device 0. The error was that the drive had disappeared from the system. The controller detected the drive again by itself after a little while and started rebuilding the RAID1 set, which took many hours. Once done, it failed again within 10 minutes. Same thing. I checked the cables, and all were good, so I was baffled. I assumed either the CN1 cable was bad, or the RAID card, or the hard drive.

So I purchased 3 x 2TB Western Digital Caviar Black drives, disconnected the RAID1 set, and connected the new drives as a RAID5 on CN1 devices 0, 1 and 2. I initiated a build/verify on the RAID5 volume, and after a gruelling 24 hours it finished. This duration was absurdly long. Less than one day after the build/verify completed, the exact same thing happened: device 1 on CN1 dropped out of the set. I rebuilt it, and then device 2 dropped out. This confirmed that neither the original hard drive nor the specific CN1 device 0 port was at fault.

Next step - replace the card, as I could not find any SATA breakout cables. As usual, I purchased the best I could afford - the LSI MegaRAID SAS 9265-8i kit. This is a PCIe x8 RAID card that supports SATA 6Gbps and has 1GB of RAM as cache; the Adaptec only had 128MB. I installed the LSI card in a PCIe x16 slot next to the Adaptec, and moved the 3 x 2TB drives over to CN0 devices 0, 1 and 2. A RAID5 volume was set up and initialised, and after 4.5 hours the build was done. This was much better. The next step was to copy the data from the RAID5 on the Adaptec to the RAID5 on the LSI. This took a long time, as the read speed on the Adaptec was horrific. Once done, I moved the 3 x 1.5TB RAID5 from the Adaptec to CN1 devices 0, 1 and 2 on the LSI, and removed the Adaptec card.
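The difference between the two build times is easier to appreciate as a per-drive throughput. This is only a rough sketch: it assumes the build touches the full 2TB of each member drive in parallel, so the elapsed time is roughly one drive's capacity divided by the per-drive rate. Real controllers may behave differently, and the function name is hypothetical.

```python
def build_rate_mb_s(drive_tb: float, hours: float) -> float:
    """Approximate per-drive throughput implied by a full-array build time."""
    megabytes = drive_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return megabytes / (hours * 3600)


adaptec = build_rate_mb_s(2.0, 24.0)  # 24-hour build/verify on the Adaptec
lsi = build_rate_mb_s(2.0, 4.5)       # 4.5-hour initialisation on the LSI

print(f"Adaptec: ~{adaptec:.0f} MB/s per drive")
print(f"LSI:     ~{lsi:.0f} MB/s per drive")
print(f"Speed-up: {lsi / adaptec:.1f}x")
```

Under that assumption the Adaptec build averaged only a little over 20MB/s per drive, far below what a 7200 rpm drive can sustain, while the LSI build ran more than five times faster.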

One day later the LSI's RAID alarm went off… One of the old RAID5's drives stopped responding and dropped out of the set… CN1 device 1. I was stumped. EVERYTHING had been replaced: the RAID card, the SATA breakout cable, and the power connector from the PSU to the hard drives. The hard drives themselves were not replaced, but they had been working on the Adaptec even when the 2 x 1TB RAID1 failed, and I know these hard drives are fine. I could not see how the motherboard could have any effect, as the RAID card abstracts the SATA drives. The PSU is more than powerful enough at 1kW.

In the end the ONLY thing that has worked (so far, it's been a couple of days) was to move the old 3 x 1.5TB RAID5 array to my Linux machine on the Adaptec RAID controller, on CN0 devices 0, 1 and 2, and leave the LSI with only the new 3 x 2TB RAID5 on CN0 devices 0, 1 and 2. This means I wasted a LOT of money on a card with 8 ports when I can apparently only use 4 at a time.

This issue is still unresolved, but at least it seems like I have two working RAID5 volumes now, although not in the same machine.

As far as the performance goes, here are some interesting results.

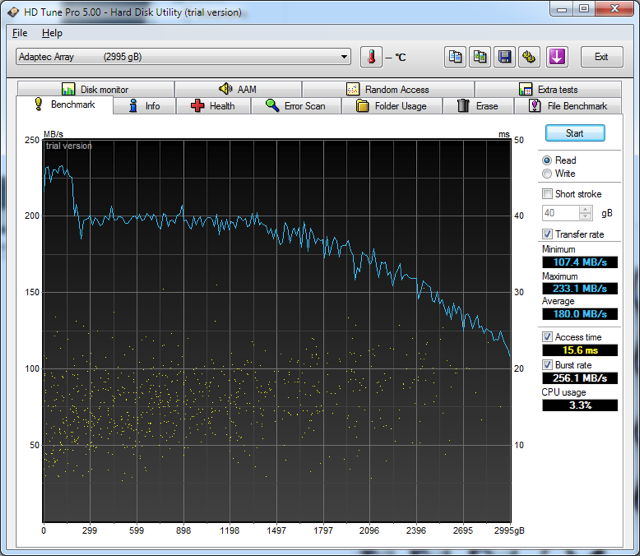

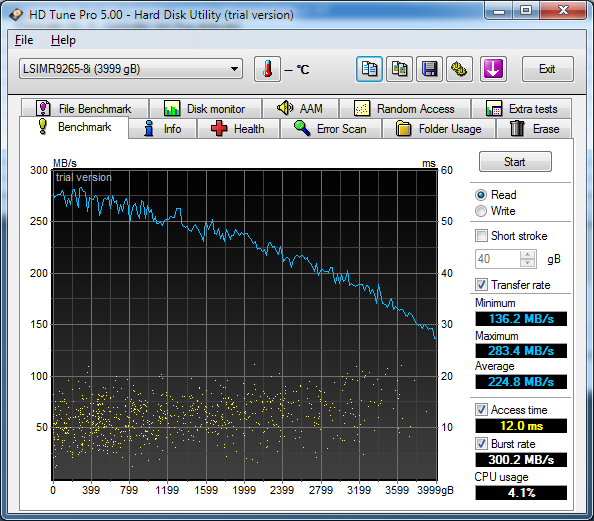

On the first day I installed the Adaptec RAID card in the new machine, I got acceptable results for the 3TB RAID5 array:

Clearly the graph does not read as it should; there seems to be some artificial limitation. It should start high and drop off.

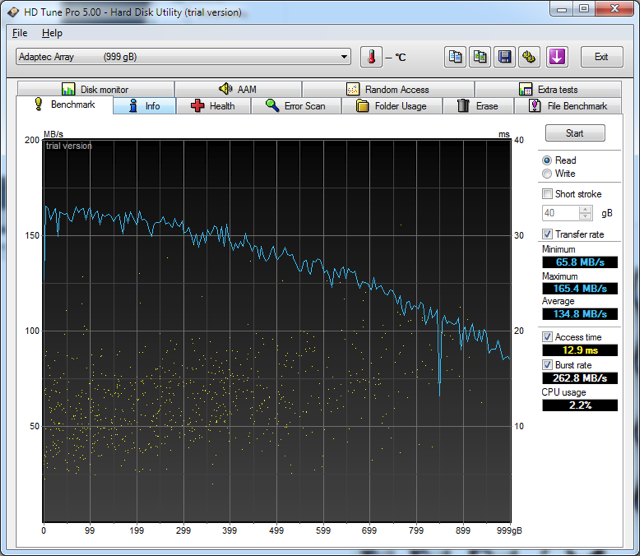

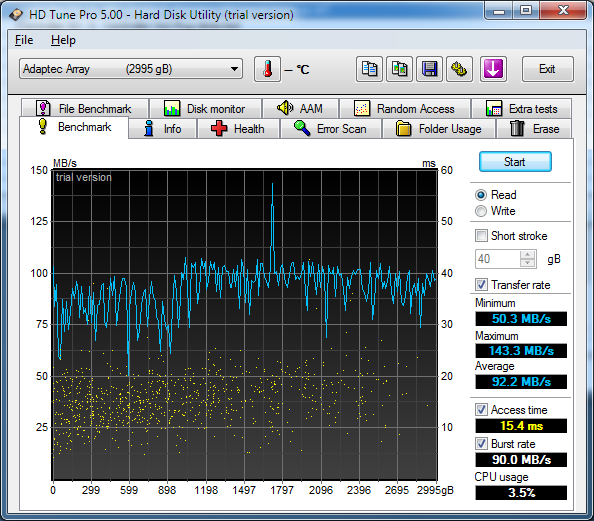

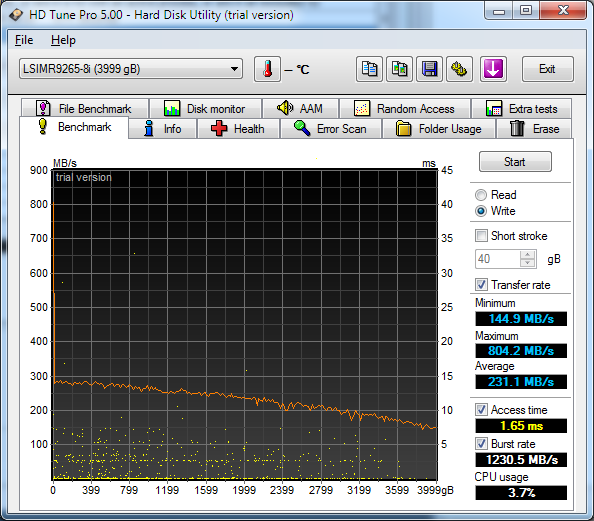

Once I moved the Adaptec out of the new machine and removed the extra RAID5 volume, this is how the new 4TB RAID5 volume on the LSI performs:

The initial spike at 804MB/s is the 1GB cache filling up, then normal write speeds resume. I am happy with the write speed.

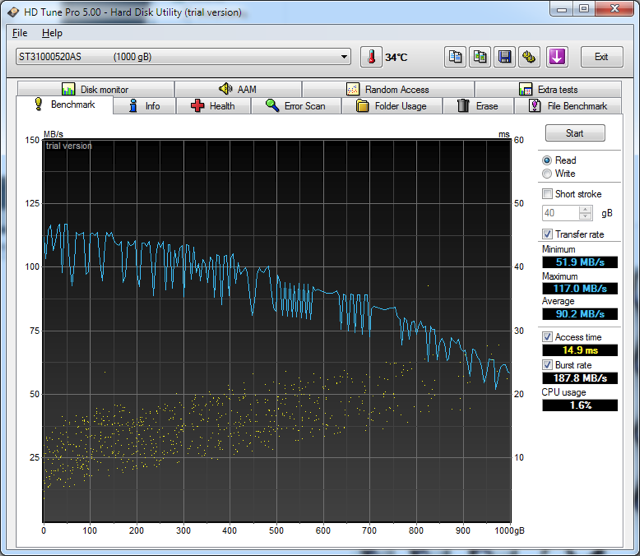

The USB3 1TB external hard drive performed well too:

It is clear that this drive is limited by the actual hard drive and not the bus, as would have been the case on USB 2.0.

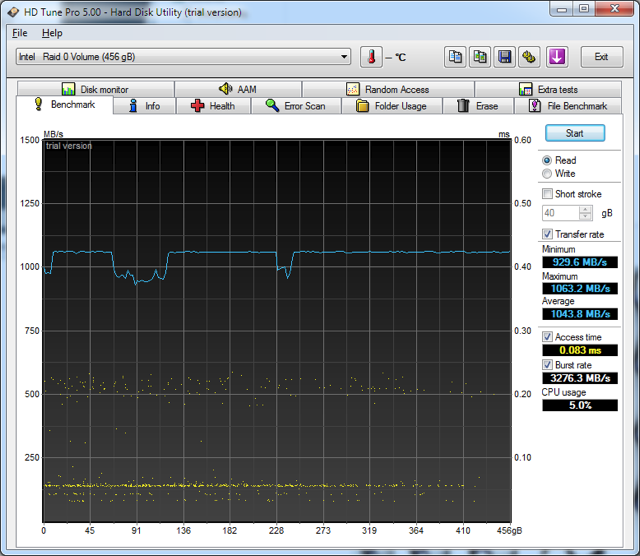

Lastly, here is the insane speed I get on the RAID0 SSD OS/Application volume: